How To Run A Data Quality Audit For Go-To-Market [Framework]

What's on this page:

Want more? Head over to our RevOps hub:

Teams don’t actively choose to run go-to-market (GTM) motions on shaky data. But if you assume that your data is ‘good enough’, then you may as well be. Without regular audits on data quality, you may find cracks in pipeline, forecasting, segmentation, personalisation, and (increasingly) large language model (LLM) answers.

In conversations with Toni Mastelić, VP of Data at Cognism and Sandy Tsang, VP of RevOps at Cognism, one thing came through clearly: a GTM data quality audit doesn’t need to be mystical or massive. But it does need a clear standard for what “good” looks like, and is repeatable to keep it that way.

As Sandy put it, “It’s kind of like spring cleaning your house. Do you do it weekly or just once a year? And then how big of a job is that?”

This blog breaks the audit down into a practical framework you can apply, whether you’re auditing your CRM, data warehouse, GTM tech stack, or the data partner that feeds it.

What leaders underestimate when they assume data is “good enough”

When leadership teams say their CRM data is “good enough,” they’re usually thinking about visible risk. But as Sandy and Toni both pointed out, the most dangerous risks are often the ones that don’t show up until decisions are already being made.

Sandy: Compliance and revenue risk show up at the leadership level

Sandy framed the risk first through the lens of governance and commercial decision-making.

From a compliance standpoint, “good enough” isn’t subjective at all:

“You have someone like a Chief Information Security Officer, who would probably want to sense check that… we have clear documentation of the source of the data, the permissions that we’ve collected, all that stuff.”

If you can’t clearly prove where your data came from, how it was collected, and whether it can legally be used, then “good enough” quickly becomes a liability.

But Sandy’s bigger concern sat on the revenue side. Even when CRM data looks clean on the surface, it often isn’t strong enough to support the kinds of financial decisions leadership actually care about:

“Good enough data is not going to be able to support you on really great analysis for your product packaging, pricing strategies and renewal strategies. It will give you a guideline direction, but it’s not going to properly give you your full financial impact.”

In other words, “good enough” data may point you in roughly the right direction, but it rarely gives leaders the confidence to make great decisions. Over time, that gap shows up as suboptimal pricing, missed expansion opportunities, and avoidable churn.

Toni: “Good enough” hides operational and data quality risk

Toni approached the problem from a data and execution perspective, looking at what actually breaks when teams rely on poor data quality.

In practice, the first issues don’t show up in dashboards or audits. They show up in day-to-day GTM work.

Contact records that look valid stop working. Reps reach the wrong people. Relationships between fields quietly break as people move roles, change companies, or as company attributes drift out of date.

“It’s not enough to get the mobile number and get it verified one time. It’s no longer verified after some time.”

On the surface, nothing looks obviously wrong. Fields are filled. Data appears complete. Systems continue to function. But usability erodes underneath.

Teams start compensating without realising it:

- SDRs second-guess the data they’re given.

- Ops teams patch workflows with exceptions.

- Leadership decisions rely on increasingly narrow slices of reality.

The most dangerous failures are the ones that don’t announce themselves. Data quality can look stable while becoming progressively less representative of the market it’s meant to describe.

By the time problems surface in performance, forecasting, or segmentation, the issue isn’t a single broken field; it’s that the data has slowly stopped reflecting how the market actually looks.

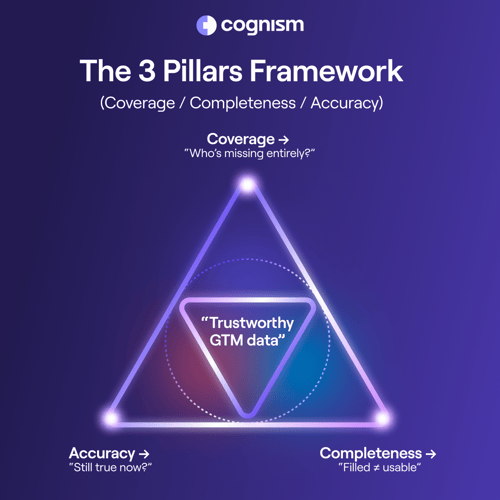

Our GTM data quality audit framework: Coverage, completeness, accuracy

Toni offered a clean way to audit data quality that cuts through the noise:

“You can think of this like three pillars: Coverage, completeness, and accuracy.”

Here’s what those pillars mean in practice, and where teams tend to fool themselves.

Pillar 1: Accuracy is about whether the data is still correct now

Accuracy isn’t about whether the data was correct at some point. It’s about whether it’s still correct when you’re acting on it.

Toni’s view is that accuracy quietly erodes over time, even when data initially looks solid. A record can be verified, structured, and quietly wrong simply because it hasn’t been revisited:

“You might have an email, maybe it was even verified, but it was verified a long time ago, so it’s no longer the right email.”

This decay and degradation doesn’t just affect contact data. Company-level attributes suffer from the same problem:

“Even when you’re doing company information like revenue or headcount, is the number that you have still accurate three months down the line?”

The risk is subtle. Nothing necessarily looks broken. Fields are filled. Reports still run. But the decisions being made on top of that data slowly drift away from reality.

Pillar 2: Completeness doesn’t say much on its own

Completeness is the most seductive metric in a GTM data audit, because it’s immediately visible.

Empty field? Bad. Filled field? Good.

The problem is that completeness on its own says nothing about whether the data is usable. Fields can be fully populated while still being outdated, incorrect, or misleading. A record can look “perfect” in a CRM and still fail the moment a rep tries to act on it.

This is where many teams, and even some data evaluations, go wrong. Completeness is easy to optimise for. You can fill every field quickly. But unless those fields are accurate and up to date, completeness becomes a false signal of quality rather than proof of it.

Worse, over-rewarding completeness can actively skew decision-making. Datasets that look richer may be prioritised over smaller, more accurate ones.

In competitive evaluations, this often leads teams to back the option that appears more comprehensive, even when the underlying data performs worse in real GTM execution.

Pillar 3: Coverage often gets mistaken for completeness

Coverage is where most go-to-market data audits quietly fail, not because teams ignore it, but because they mistake completeness for coverage.

Many teams assume that if records are fully populated, the dataset must be representative. Toni was clear that this is a dangerous leap. You can have data that looks perfect on paper and still be fundamentally misleading:

“It’s easy to have 100% completeness. You just put random garbage in the fields, and you have 100% completeness, but is the data actually accurate?”

Completeness only tells you what exists inside the dataset. Coverage is about what exists outside it; the people, accounts, and buying roles that never appear at all.

This is where false confidence creeps in. Teams audit the records they already have, see high completeness and strong performance, and conclude that the system works. But that conclusion is based on a partial view of the market.

As Toni explained, the real risk is invisible:

“You get 100 profiles, and you say, okay, they actually look great, but you have no idea that there are thousands of other profiles that you should actually be calling because there is nothing in the dataset.”

This is why chasing “100% data” is a distraction. You will never have complete coverage of everything. What matters is whether your data covers the right people, in the right companies, for the decisions you’re trying to make.

“You will never have a hundred percent of everything; you just focus on what our clients are actually interested in.”

In practice, coverage gaps show up as:

- Decision-makers are missing entirely from target accounts.

- Buying roles beyond familiar personas are consistently underrepresented.

- Regions or segments constrained not by demand, but by visibility.

Over time, GTM teams adapt around these gaps without consciously recognising them. Outreach narrows. Segmentation reinforces itself. Strategy becomes shaped by who is easiest to reach, not who matters most.

The framework for how to audit your GTM data quality

Step 1: Define what “trustworthy” means

For Sandy, trustworthy GTM data isn’t about having accurate fields in isolation. It is about whether the data holds together as a joined-up picture across the customer lifecycle:

“We need to have the whole picture joined up of what a customer truly looks like.”

Without that, teams struggle to answer the questions that actually matter: LTV, expansion likelihood, churn risk, or what really changed in this segment?

As Sandy pointed out, even well-maintained data becomes unreliable if definitions evolve, but documentation and alignment don’t. When different teams operate on slightly different versions of “truth”, the data may still look correct, but it’s no longer trustworthy.

From Toni’s perspective, the test is simpler: Trustworthy data is data you would actually act on.

“You need to be able to take that data and be able to stake a decision on it.”

So before you open a spreadsheet or run a field-by-field audit, agree on your audit’s trust test. For most teams, it comes down to three questions:

- Can we explain this number?

- Can we defend it cross-functionally?

- Would we stake a commercial decision on it?

If the answer is no, you don’t have trustworthy data, regardless of how complete or clean it looks.

Step 2: Pick the scope and validate it with samples

One of the biggest mistakes teams make in a GTM data audit is trying to assess everything at once. Full coverage of all data, all regions, and all records isn’t just unrealistic, but it’s unnecessary.

So before you run a field-by-field audit, define a focused scope based on where data quality actually matters. For most teams, that means auditing around:

- Your ICP accounts and key segments.

- Your target regions.

- Buying committee roles you actively sell to.

- Your highest-impact workflows (routing, scoring, outbound, forecasting, lifecycle comms).

Once that scope is clear, Toni’s advice is to pressure-test it using representative samples, rather than attempting a full data audit upfront. Instead of checking every record, he recommends taking a statistically meaningful sample and validating it against ground truth:

“This is statistically completely viable, because if you take a big enough sample, you can tell something about their data. You don’t need to check all of their data. We take a sample of, say, 1000 records, and then we collect ground truth data for the sample, and then we compare it.”

Sampling also helps teams distinguish where problems actually sit. Accuracy issues require manual validation against reality, while completeness can often be assessed programmatically at scale.

Step 3: Don’t treat a data refresh the same as an audit

One of the most common mistakes teams make is treating data refresh and data audit as the same activity. They’re related, but they solve different problems.

Refreshing data is operational. It keeps records up to date as reality changes. But auditing data is strategic. It checks whether your data still supports your go-to-market plays and overall approach.

Refreshing data is keeping information current

Keeping GTM data truly up to date is far harder than it looks. Toni was clear that relying on “triggers” for freshness simply doesn’t work in the real world:

“You cannot do event-based data updates. You need to do time-based updates at specific intervals because no one will be sending us a trigger that some guy changed companies.”

People don’t announce job changes in clean, machine-readable ways. Even when signals exist, they’re often delayed or incomplete. That’s why freshness needs to be managed deliberately, on a defined cadence.

For high-value profiles, Toni described a realistic standard we use in-house (bearing in mind we are a data organisation, data is what we do):

“We update the person profiles for high tier profiles every month.”

But more frequent refresh cycles aren’t automatically better. For many organisations, overly aggressive refreshes simply increase cost and noise without meaningfully improving accuracy.

This is where the gap between data providers and most GTM teams becomes important to acknowledge.

For a data company, maintaining freshness at scale is the job. The tooling, processes, and resourcing are designed around continuous validation and refresh. For most organisations, it isn’t, and trying to replicate that internally often becomes unsustainable.

That doesn’t mean GTM teams shouldn’t audit freshness. It means they need to be realistic about:

- Which segments actually warrant frequent refresh.

- Where internal ownership ends.

- And where a specialist data partner can shoulder that burden more effectively.

Working with a specialist data provider helps reduce this burden by treating freshness as infrastructure. Instead of asking RevOps, SalesOps, or MarOps teams to continuously chase job changes and reconcile inconsistencies, that operational load is absorbed upstream.

Practical freshness checks you can run internally

Even if you’re not refreshing data monthly, you can still audit whether freshness is becoming a risk:

- % of contacts touched or verified in the last 30/60/90 days (by segment).

- Job-change rate and mismatch rate (contact with the company).

- Bounce rates and wrong-number rates are trending over time.

- % of “unknown” or blank fields creeping up (often a sign enrichment or syncs are failing).

If these metrics are deteriorating, especially in high-value segments, freshness is already impacting GTM execution, whether it’s visible yet or not.

Auditing data: checking fitness for strategy

Auditing is different. A GTM data audit is about stepping back and asking whether your data still reflects your strategy, definitions, and priorities. Sandy’s recommendation for how often to do this was deliberately practical:

“I think annually.”

Not because nothing changes during the year, but because an annual checkpoint stops small drift from turning into a full-blown rebuild:

“You want to do it so that it doesn’t balloon into a huge problem.”

For most organisations, the cadence that works in practice looks like this:

- Annual: Full GTM data audit and definition and version review.

- Quarterly: KPI health checks and pipeline flow validation.

- Monthly: Freshness checks for Tier 1 segments and highest-impact workflows.

In short: refresh continuously, audit deliberately.

Step 4: Measure accuracy the right way

By this point, you’ve scoped the data that matters most and checked whether it’s being refreshed often enough. The next question is simpler, and more uncomfortable:

Is this data right?

Toni’s approach to accuracy focuses on verifying reality rather than just inspecting systems. The key here is ground truth. Accuracy can’t be inferred from internal consistency alone. You need an external point of reference, manual verification, trusted third-party sources, or direct confirmation to know whether a record is still true.

AI can help accelerate this step by gathering baseline information, but human validation remains essential. Toni explains:

“We can run AI to collect the baseline data and then get our data annotators to check. This speeds up the process a little bit.”

In other words, automation can reduce effort, but judgment is still required to confirm accuracy.

You might also like to read: What is AI-ready data?

Practical accuracy checks to include in your audit

- Random validation of high-impact fields (job title, company, region, seniority, email, phone).

- Relationship accuracy checks (does this person still work at this company?).

- Segmentation accuracy (are accounts and contacts being classified correctly?).

- Duplicate and merge errors (one real entity split across records, or multiple entities collapsed into one).

If accuracy breaks down in these areas, downstream systems don’t just perform poorly; they also actively mislead teams. And unlike freshness issues, accuracy errors are often invisible until revenue outcomes start to suffer.

Step 5: Check the data completeness

Completeness is still useful. Just treat it as a supporting metric, not the goal. A simple way to do this:

- Track completeness for each critical field by segment (for example, Tier 1 accounts or active pipeline accounts, not your entire database).

- Pair completeness with accuracy and freshness thresholds so a field only “counts” if it’s both filled and trustworthy.

- Flag “too-perfect completeness” as a warning sign - it often indicates inferred, duplicated, or low-confidence data rather than genuine coverage.

Completeness is most useful when it’s directional. Sudden drops can signal broken enrichments, sync failures, or ownership gaps. Gradual increases in “unknown” or blank values often precede performance issues in pipeline metrics.

If completeness is rising but conversion, connect rates, or qualification quality aren’t improving, that’s a strong signal that the data looks healthy but isn’t doing useful work.

Step 6: Check coverage where it actually matters

The goal of this step is to surface gaps that directly limit pipeline today, not to assess database size in the abstract.

You can pressure-test data coverage in three places:

Decision-maker coverage

-

Where do reps consistently fail to find senior buyers?

-

Which roles repeatedly need manual research or workarounds?

-

Where do deals stall because the right stakeholder never enters the system?

Buying group depth

-

Which segments only surface one or two contacts per account?

-

Where are technical, financial, or regional stakeholders missing?

-

Are certain personas only visible in late-stage deals?

Regional and segment coverage

-

Which regions underperform despite strategic focus?

-

Where does pipeline lag behind headcount or TAM expectations?

-

Are “low-performing” segments actually under-covered rather than low-intent?

Another useful signal is where teams spend disproportionate time compensating for gaps:

- Excessive LinkedIn sourcing.

- Spreadsheets are maintained outside the CRM.

- Manual enrichment requests.

- Repeated exceptions in routing or scoring rules.

These are indicators that coverage is limiting scale.

Where AI fits in a GTM data audit

Both Toni and Sandy landed on the same theme: AI is useful, but only when you treat it like support, not authority.

Sandy’s framing:

“You can definitely leverage AI to help you find the irregularities, the anomalies and sense-checking your approach…”

But:

“Ultimately, you know your data best”

And she offered the best mental model: “It’s kind of like a coach rather than giving you the answer.”

Toni went deeper on the core risk with LLMs: they’ll try to “complete” missing data by making it up.

“If the LLM is working on top of data which is not complete, it’ll make it complete, by hallucinating data.”

And he warned that fragmentation makes it worse, because LLMs struggle with context and relationship mapping:

“Putting them in the right context and actually building a profile out of it would be extremely hard; it would find one information from this file, one from that file, and it would give you something that looks really high quality, but it’ll be completely made up.”

What a data quality audit is really about

A GTM data audit isn’t about chasing perfect data. It’s about knowing, with confidence, whether the data you rely on still reflects how your market, customers, and strategy actually look today.

What Sandy and Toni both made clear is that data rarely fails loudly. It drifts. Definitions evolve. Freshness decays. Coverage narrows. And teams adapt around those gaps until decisions start feeling harder to defend.

That’s why audits matter. Not as one-off clean-ups, but as a deliberate checkpoint. Refreshing data keeps information current. Auditing ensures that information remains trustworthy, aligned, and fit for purpose.

For most organisations, the goal isn’t to do everything internally. It’s to be clear about:

- What you need to trust.

- Where freshness and coverage matter most.

- And which parts of the workload should be treated as infrastructure.

If your GTM data supports confident decisions, your audit is doing its job. If it doesn’t, no amount of “good enough” will compensate for the cost of getting it wrong.

/Data%20quality%20audit/data-quality-audit-card.webp)

/Lead%20routing%20tools/lead-routing-tools-resource-card.webp)